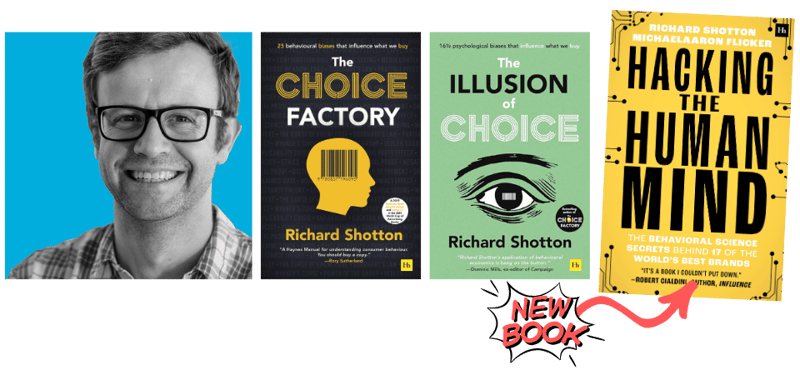

Book of the Month: Hacking the Human Mind

Why do people happily pay over the odds for bottled water, queue patiently for a pint of Guinness, or go back to Amazon again and again without really shopping around?

Why do people happily pay over the odds for bottled water, queue patiently for a pint of Guinness, or go back to Amazon again and again without really shopping around?