The idea of artificial humanity and intelligence dates back to antiquity, fascinating hundreds of societies and millions of people for thousands of years. And now we use it to create burger-flipping robots. We truly do live in the future.

But AI is not just about silver metal men. More importantly, AI is about understanding the nature of intelligent thought and action, using computers as experimental devices. So it's no surprise that AI, as a field of study, is not restricted to computer science.

This means big-wigs from engineering (such as cybernetics), biology (neural networks), experimental psychology, communication theory, game theory, mathematics and statistics, logic and philosophy, and even linguistics have come together. Of course, you can't let the robot use the wrong form of "their".

The excitement around "intelligent machines" ramped up in the 18th and 19th centuries, with the emergence of chess-playing machines such as "the Turk". These inventions were exhibited as genuine automata and fooled people into believing the machines were playing autonomously, when in fact the Turk was secretly operated by a hidden human chess player.

But only in the latter half of the 20th century have we had computers, devices, and programming languages powerful enough to start achieving what our ancestors imagined. These new advances have allowed us to build experimental tests of ideas about what intelligence even is. But let's start right at the beginning, with:

Between 380 BC and the late 1600s

Back in the day, plenty of mathematicians, theologians, essayists, authors, and philosophers considered the idea of artificial intelligence in a more rudimentary way. From mechanical techniques and calculating machines to numeral systems, these intellectuals considered the concept of automatic thought in non-human forms.

The early 1700s

The idea of all-knowing machines captured the curiosity of many literary greats during this time. Jonathan Swift's 1726 novel “Gulliver's Travels” describes a device called the engine, which is one of the earliest references to something like a computer. The device was meant to improve knowledge and mechanical operations so that everyone, even the most incompetent, could seem skilled, all thanks to the assistance of a non-human mind.

1939

Physicist and inventor John Vincent Atanasoff and his graduate student assistant Clifford Berry created the Atanasoff-Berry Computer (ABC) with an Iowa State University grant of $650. The computer weighed over 700 pounds and could solve up to 29 simultaneous linear equations.

1943

In 1943, Walter Pitts and Warren McCulloch analysed networks of idealised artificial neurons and presented how they might perform simple logical functions.

So, these guys were the first to describe what future researchers would call a "neural network". According to CSU Global, a neural network is a "process that analyses data sets over and over again to find associations and interpret meaning from undefined data. Neural networks operate like networks of neurons in the human brain, allowing AI systems to take in large data sets, uncover patterns amongst the data, and answer questions about it."
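To make the 1943 idea of neurons performing "simple logical functions" concrete, here is a minimal Python sketch of a threshold unit in the spirit of McCulloch and Pitts: it takes binary inputs, adds them up with fixed weights, and "fires" (outputs 1) only if the sum reaches a threshold. The function names, weights, and thresholds are hypothetical choices for illustration, and the sketch simplifies the original model (which treated inhibitory inputs as an absolute veto) to a plain weighted sum.

```python
from itertools import product


def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Hand-picked weights and thresholds (illustrative, not taken from the 1943 paper).
def and_gate(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return threshold_neuron([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    # Either active input is enough to reach a threshold of 1.
    return threshold_neuron([a, b], [1, 1], threshold=1)


def not_gate(a):
    # A single negative (inhibitory) weight: fires only when the input is silent.
    return threshold_neuron([a], [-1], threshold=0)


if __name__ == "__main__":
    for a, b in product([0, 1], repeat=2):
        print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
    print("NOT 0:", not_gate(0), "NOT 1:", not_gate(1))
```

The same unit computes different logic gates purely by changing its weights and threshold, which is the insight that later networks built on.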

1950

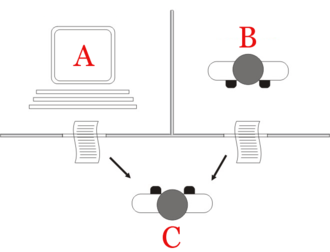

Now, here's one for all you code-breaking fans out there. In 1950 Alan Turing published a landmark paper in which he speculated about the possibility of machines that 'think'. He noted that the idea of 'thinking' is difficult to define, so he devised the Turing Test.

This is a test of a machine's ability to exhibit intelligent behaviour equivalent to, or indistinguishable from, that of a human. An evaluator puts questions to both a machine and a human, and if the evaluator cannot tell which is which from their answers, the machine passes the test.

In 1945, Vannevar Bush's paper had laid out a vision of AI possibilities, but Turing went further. He was actually writing programmes for a computer to, for example, play chess.

But what stopped Turing in his tracks? Well, before all this could become a reality, computers needed to change and develop massively. Before 1949, computers could execute commands but could not store them, and storing commands is a key prerequisite for intelligence.

Plus, computing was darn expensive. In the early 1950s, leasing a computer could cost the unlucky soul up to $200,000 a month.

1951

In 1951, using the Ferranti Mark 1 machine, computer scientist Christopher Strachey wrote a checkers programme, while computer science pioneer Dietrich Prinz wrote one for chess.

One of the students inspired by Pitts and McCulloch was Marvin Minsky, then a 24-year-old graduate student. In 1951 he and Dean Edmonds built the first neural net machine, called the SNARC. Minsky went on to become one of the most important leaders and innovators in AI for the next fifty years.

1956

Roughly five years after Turing's paper, his ideas were put into practice by Logic Theorist, written by Allen Newell, Cliff Shaw and Herbert Simon.

Logic Theorist is a computer programme engineered to perform automated reasoning. Designed to mimic the problem-solving skills of a human, it was funded by the Research and Development Corporation (RAND).

Considered by many to be the first AI programme, it was presented at the Dartmouth Summer Research Project on Artificial Intelligence (DSRPAI), hosted by John McCarthy and Marvin Minsky. It would eventually prove 38 of the first 52 theorems in Whitehead and Russell's Principia Mathematica.

At this conference, McCarthy brought together top researchers from a broad spectrum of fields, imagining that a huge collaborative effort would be needed for the technology to succeed. The term "artificial intelligence" was coined for this event.

1957-1974

At this point in time, AI flourished. Computers could store far more information, and they became far cheaper and therefore far more accessible.

Machine learning algorithms also improved. CSU Global defines machine learning as:

"A specific application of AI that lets computer systems, programmes, or applications learn automatically and develop better results based on experience, all without being programmed to do so. Machine Learning allows AI to find patterns in data, uncover insights, and improve the results of whatever task the system has been set out to achieve."

Plus, people got better at knowing which algorithm applied to their problem.

Newell and Simon's General Problem Solver and Joseph Weizenbaum's ELIZA showed promise toward the goals of problem-solving and the interpretation of natural language, respectively. Plus, the US government showed a particular interest in a machine that could transcribe and translate spoken languages, as well as in high-throughput data processing.

In 1970 Marvin Minsky told Life Magazine, “from three to eight years we will have a machine with the general intelligence of an average human being.”

But while the basic proof of the principle was there, the goals of natural language processing, abstract thinking, and self-recognition were far off.

In 1973, the Lighthill report on the state of AI research in the UK criticised the field's "grandiose objectives" and its failure to meet them. This led to deep cuts in AI research in the country. Plus, DARPA was disappointed with researchers working on the Speech Understanding Research programme and cancelled an annual grant of three million dollars.

1974-1980

These years were known as the first AI winter. Yep, there's a second one coming up in a moment.

In the 70s, the field was subject to a bunch of critiques and financial setbacks. Clouded by unrestrained optimism, AI researchers had not predicted how difficult certain problems would be. Expectations were set so unreachably high that results couldn't keep up, so funding disappeared.

Plus, researchers faced a number of other issues, including:

- Limited computer power

- Intractability. This is the idea that a problem can be solved in theory, but any solution takes way too many resources to ever be useful in practice.

- Combinatorial explosion. A combinatorial explosion is the rapid growth of the complexity of a problem due to how the combinatorics (an area of mathematics primarily concerned with counting) of the problem is affected by the input, constraints, and bounds of the problem.

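- Commonsense knowledge and reasoning. Many everyday tasks depend on a vast amount of basic knowledge about the world that humans take for granted but that is extremely hard to encode in a machine.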

- Moravec's paradox. This is the idea that solving problems and proving theorems is easy for a computer, but a simple task like recognising a face or crossing a room is pretty difficult.

- The frame problem. This concerns the application of knowledge about the past to draw inferences about the future. It requires distinguishing those properties that change across time against a background of those properties that do not, which thus constitute a frame.

- The qualification problem. This is concerned with the impossibility of listing all the preconditions required for a real-world action to have its intended effect.
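To see how quickly a combinatorial explosion bites, here is a small Python sketch that counts the round trips a brute-force search would have to check in a travelling-salesman-style routing problem. The example problem and the function name are illustrative assumptions chosen to show the growth, not something the researchers of the period are quoted on here.

```python
import math


def brute_force_tours(n_cities: int) -> int:
    """Count distinct round trips through n cities.

    Fixing the starting city leaves (n - 1)! orderings of the rest, and
    every route is counted twice (once per direction), so divide by 2.
    """
    if n_cities < 3:
        raise ValueError("need at least 3 cities for a round trip")
    return math.factorial(n_cities - 1) // 2


if __name__ == "__main__":
    for n in (5, 10, 15, 20):
        print(f"{n:>2} cities -> {brute_force_tours(n):,} possible tours")
```

Going from 10 cities to 20 takes the count from 181,440 tours to roughly 6 × 10^16, which is why a problem that is solvable in principle can become intractable in practice.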

1980-1987

After the winter comes the spring. So, next, we're jumping into the AI boom.

In the 1980s a form of AI programme called "expert systems" was embraced by many companies. So, knowledge became the focus of mainstream AI research.

As Wikipedia describes it:

"Knowledge representation and reasoning is the field of artificial intelligence (AI) dedicated to representing information about the world in a form that a computer system can use to solve complex tasks such as diagnosing a medical condition or having a dialogue in a natural language."

In these years, the Japanese government ran head-first into funding AI with its fifth-generation computer project.

The 1980s also saw the revival of connectionism, which refers to the field of cognitive science that hopes to explain mental phenomena using artificial neural networks and a wide range of techniques to build more intelligent machines. This movement stemmed from the work of John Hopfield and David Rumelhart.

This decade also saw the emergence of John Searle's "Chinese Room Argument", which challenged the Turing Test. The thought experiment suggests that a digital computer executing a programme cannot have a mind or consciousness, regardless of how human-like it may behave.

TLDR; the Chinese Room Argument proposes a computer that, when provided with Chinese characters, may be able to output a conversation in Chinese that a native speaker would perceive as human-like. But is the computer understanding Chinese, or simulating the ability to understand Chinese?

Searle suggested that if he were in the same situation, equipped with an English-language book of instructions for manipulating the Chinese characters, he would be able to mimic the language without understanding a word of it. So, he asserts that there is no difference between the roles of the computer and himself in the experiment.

1987–1993

...And there the enthusiasm goes.

The business community's interest in artificial intelligence fell in the late 80s, thanks to the bursting of a good ol' fashioned economic bubble.

This collapse was down to the failure of commercial vendors to develop a wide variety of workable solutions and, as a result, many companies failed. So, the tech was seen as not viable.

But the field carried on making advances. Many researchers began to argue for an entirely new approach to AI.

1997

In May of this year, IBM's Deep Blue became the first chess-playing computer to beat a reigning world chess champion, Garry Kasparov, in a match.

The computer was a specialised version of a framework produced by IBM and could process twice as many moves per second as it had during its first (losing) match against Kasparov in 1996. The event was documented on the internet and received over 74M hits.

1998

By 1998, Judea Pearl's influential 1988 book had brought probability and decision theory into AI.

Among the new tools in use were:

- Bayesian networks. A probabilistic graphical model that represents a set of variables and their conditional dependencies.

- Hidden Markov models. A statistical Markov model (a stochastic model used to model pseudo-randomly changing systems) in which the system being modelled is assumed to be a Markov process with unobservable (hidden) states.

- Information theory. The study of the quantification, storage, and communication of information.

- Stochastic modelling. A tool for estimating probability distributions of potential outcomes by allowing for random variation.

- Classic optimisation. The selection of the best element from some set of available alternatives.

During this time, precise mathematical descriptions were developed for paradigms such as neural networks and evolutionary algorithms.
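As a rough illustration of how a Bayesian network is used, here is a minimal Python sketch of the textbook "rain, sprinkler, wet grass" example. All probabilities are made-up numbers, the network structure (rain and sprinkler as independent causes of wet grass) is an assumption for illustration, and inference is done by brute-force enumeration, which is only practical for tiny networks.

```python
from itertools import product

# Hypothetical conditional probability tables (illustrative numbers only).
P_RAIN = {True: 0.2, False: 0.8}
P_SPRINKLER = {True: 0.3, False: 0.7}
# P(grass is wet | rain, sprinkler)
P_WET_GIVEN = {
    (True, True): 0.99,
    (True, False): 0.90,
    (False, True): 0.80,
    (False, False): 0.00,
}


def joint(rain, sprinkler, wet):
    """Joint probability, factorised along the network's edges:
    P(R, S, W) = P(R) * P(S) * P(W | R, S)."""
    p_wet = P_WET_GIVEN[(rain, sprinkler)]
    return P_RAIN[rain] * P_SPRINKLER[sprinkler] * (p_wet if wet else 1.0 - p_wet)


def p_rain_given_wet():
    """P(Rain=True | Wet=True) by summing out the hidden Sprinkler variable."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    evidence = sum(joint(r, s, True) for r, s in product((True, False), repeat=2))
    return numerator / evidence


if __name__ == "__main__":
    print(f"P(rain | wet grass) = {p_rain_given_wet():.3f}")
```

With these made-up numbers, observing wet grass raises the probability of rain from 0.2 to about 0.491. Because enumeration sums over every combination of hidden variables, it runs straight into the combinatorial explosion described earlier, so practical systems rely on more efficient inference algorithms.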

2009

At the beginning of the 21st century, big data kicked into gear. Thanks to this, along with cheaper and faster computers, machine learning (ML) techniques were applied to many problems across the economy.

The McKinsey Global Institute later estimated that:

"by 2009, nearly all sectors in the US economy had at least an average of 200 terabytes of stored data".

2016

Now, jumping forward to 2016. By this time, the market for AI products, hardware, and software had reached more than $8 billion, with the New York Times reporting that excitement around the tech had reached a "frenzy".

"IBM has spent more than $4 billion buying a handful of companies with vast stores of medical data. “A.I. machines are only as smart as the data you give them,” said John E. Kelly, who oversees IBM’s research labs and the Watson business"

2018

The first foundation models emerged around 2018. These are large AI models trained on vast quantities of unlabelled data, which can be adapted to a wide range of downstream tasks. Unlabelled data is data that has not been tagged with labels identifying characteristics, properties, or classifications.

2020 - Now

Recently, AI has hit a fever pitch.

With models such as OpenAI's GPT-3 in 2020 and DeepMind's Gato in 2022, AI has hit a series of headline-grabbing milestones. Each of these has been billed as a step towards artificial general intelligence.

Having overcome many of the restrictions it faced in the past, AI is now growing rapidly, and that growth is closely tied to a bunch of technological improvements. These include:

- Larger, more accessible data sets. Without the development of fields such as the Internet of Things, which pumps out a huge amount of data thanks to connected devices, AI would have far fewer potential applications.

- GPUs. Graphics Processing Units provide the parallel computing power needed to perform the millions of calculations that AI requires.

- Intelligent Data Processing. Advanced algorithms allow systems to analyse data sets faster and at multiple levels.

- Application Programming Interfaces. APIs allow AI functions to be added to traditional computer programmes and software applications, enhancing their ability to identify and understand patterns in data.

So, there we go: AI from its very beginnings to the present day. Many concepts that were purely theoretical only decades ago have now been brought to fruition, thanks to advancements in many fields of tech. The collaborative effort imagined by John McCarthy and Marvin Minsky has come true. Mwah.

With AI being used for everything from automated customer service reps to self-driving cars, there's something new and exciting around every corner. Stay tuned to find out how AI is changing our world and what the future of artificial intelligence holds!